Link to the repository: https://github.com/MartinTownley/33537140_AAP_Portfolio

Project 1: FM Soundscape

Audio Render on SoundCloud:

To Run:

If you are running on a Mac, this project includes a shell script that launches the program from the terminal: cd into the project folder and type “./run.sh”.

Description

This is a soundscape piece demonstrating FM synthesis in C++, using the Maximilian library. It explores the rich range of sounds that FM synthesis can produce with a very small amount of code.

It consists of very simple components:

- a carrier sine wave, and two modulators (a sine wave and a phasor)

- a counter/clock

- a low-pass filter (to curb some of the high frequency content)

What’s going on?

A carrier wave (in this case a sine wave) is modulated by a second sine wave, whose frequency is the carrier frequency multiplied by a harmonicity ratio.

The amplitude of this modulating wave (that is, the modulation depth) is driven by a low-frequency phasor, which gives a slow-paced tour through some of the sounds that FM synthesis can produce.

The amplitude of this phasor is a fixed value.
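The signal chain described above can be sketched in plain C++ as follows. This is a minimal illustration, not the project’s code: it does not use Maximilian’s oscillators, and the FmParams struct, its field names and its default values are all hypothetical. The modulator’s frequency is the carrier frequency times the harmonicity ratio, and its amplitude (the peak frequency deviation) is a slow phasor scaled by a fixed value.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical parameter set; the values used in the actual piece are not documented.
struct FmParams {
    double sampleRate = 44100.0;
    double carrierHz = 220.0;   // carrier frequency
    double harmonicity = 1.5;   // modulator frequency = carrierHz * harmonicity
    double phasorHz = 0.05;     // rate of the slow phasor that sweeps the modulation depth
    double maxDepthHz = 800.0;  // fixed amplitude of that phasor (peak deviation in Hz)
};

// Renders a sine carrier frequency-modulated by a sine modulator whose amplitude
// ramps from 0 up to maxDepthHz once per phasor cycle.
std::vector<double> renderFm(const FmParams& p, std::size_t numSamples) {
    assert(p.sampleRate > 0.0);
    const double twoPi = 2.0 * std::acos(-1.0);
    const double modHz = p.carrierHz * p.harmonicity;
    std::vector<double> out(numSamples);
    double carrierPhase = 0.0, modPhase = 0.0, phasor = 0.0;  // all phases in [0, 1)
    for (std::size_t n = 0; n < numSamples; ++n) {
        const double depthHz = phasor * p.maxDepthHz;  // phasor drives the modulator's amplitude
        const double modulator = depthHz * std::sin(twoPi * modPhase);
        out[n] = std::sin(twoPi * carrierPhase);
        // Advance each phase by its (instantaneous) frequency and wrap back into [0, 1).
        carrierPhase += (p.carrierHz + modulator) / p.sampleRate;
        carrierPhase -= std::floor(carrierPhase);
        modPhase += modHz / p.sampleRate;
        modPhase -= std::floor(modPhase);
        phasor += p.phasorHz / p.sampleRate;
        phasor -= std::floor(phasor);
    }
    return out;
}
```

Because the phasor ramps from 0 to 1 and then resets, each cycle starts as a pure sine and grows steadily brighter as the deviation increases, which is the “slow-paced tour” effect.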

Project 2: “The Break-Breaker” (Drum Sample-chopper)

This project demonstrates sample playback techniques and Doppler-effect pitch shifting using Maximilian methods. It manipulates drum breaks by dividing each sample into an appropriate number of start points and triggering those start points in random sequences (the chopper() and sampleParamsUpadate() functions contain the code for this process). It includes five break samples to choose from (plus one vocal sample), or you can edit the code to load your own.
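The chopping idea can be sketched independently of Maximilian: split the sample into equally spaced start points, pick slice indices at random, and concatenate the chosen slices. This is an illustrative sketch only; sliceStarts(), randomSequence() and chop() are hypothetical helpers, not the project’s chopper() or sampleParamsUpadate(), and it ignores playback-speed adjustment.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Evenly spaced start points (in samples) for a break of lengthSamples split into divisions.
std::vector<std::size_t> sliceStarts(std::size_t lengthSamples, std::size_t divisions) {
    assert(divisions > 0);
    std::vector<std::size_t> starts(divisions);
    for (std::size_t i = 0; i < divisions; ++i)
        starts[i] = i * lengthSamples / divisions;
    return starts;
}

// A random sequence of slice indices, one per trigger.
std::vector<std::size_t> randomSequence(std::size_t divisions, std::size_t steps, unsigned seed) {
    assert(divisions > 0);
    std::mt19937 rng(seed);
    std::uniform_int_distribution<std::size_t> pick(0, divisions - 1);
    std::vector<std::size_t> seq(steps);
    for (auto& s : seq) s = pick(rng);
    return seq;
}

// Renders the chopped break by copying one slice per entry in the sequence.
std::vector<float> chop(const std::vector<float>& src, std::size_t divisions,
                        const std::vector<std::size_t>& sequence) {
    const auto starts = sliceStarts(src.size(), divisions);
    const std::size_t sliceLen = src.size() / divisions;
    std::vector<float> out;
    out.reserve(sequence.size() * sliceLen);
    for (std::size_t idx : sequence) {
        assert(idx < divisions);
        auto first = src.begin() + static_cast<std::ptrdiff_t>(starts[idx]);
        out.insert(out.end(), first, first + static_cast<std::ptrdiff_t>(sliceLen));
    }
    return out;
}
```

For example, chop(src, 4, {3, 0}) on an eight-sample buffer returns the last quarter followed by the first quarter.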

The audio is passed through a “Zinger” effect, which is engaged by holding Q or W. It is essentially a very short delay line whose delay time is swept by a slow phasor, creating pitch “climbs” and “dives”.
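A delay line with a phasor-swept delay time can be sketched as below. This is a hypothetical reconstruction of the general technique, not the project’s code: the class name, the parameters (delay range in samples, phasor rate, feedback) and the use of linear interpolation for fractional delays are all assumptions. As the delay time changes, the read position moves relative to the write position, which shifts the pitch (the Doppler effect).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Short delay line whose delay time is swept by a slow phasor (hypothetical "zinger").
class Zinger {
public:
    Zinger(double sampleRate, double minDelaySamples, double maxDelaySamples,
           double phasorHz, double feedback)
        : minD_(minDelaySamples), maxD_(maxDelaySamples), inc_(phasorHz / sampleRate),
          fb_(feedback), buf_(static_cast<std::size_t>(maxDelaySamples) + 2, 0.0) {
        assert(sampleRate > 0.0 && minD_ >= 1.0 && maxD_ > minD_);
        assert(fb_ >= 0.0 && fb_ < 1.0);
    }

    double process(double in) {
        const double delay = minD_ + phasor_ * (maxD_ - minD_);  // current delay in samples
        double readPos = static_cast<double>(write_) - delay;
        while (readPos < 0.0) readPos += static_cast<double>(buf_.size());
        const std::size_t i0 = static_cast<std::size_t>(readPos);
        const std::size_t i1 = (i0 + 1) % buf_.size();
        const double frac = readPos - static_cast<double>(i0);
        const double delayed = buf_[i0] * (1.0 - frac) + buf_[i1] * frac;  // linear interpolation
        buf_[write_] = in + delayed * fb_;                                 // write input plus feedback
        write_ = (write_ + 1) % buf_.size();
        phasor_ += inc_;                                                   // sweep the delay time
        if (phasor_ >= 1.0) phasor_ -= 1.0;
        return delayed;
    }

private:
    double minD_, maxD_, inc_, fb_;
    std::vector<double> buf_;
    std::size_t write_ = 0;
    double phasor_ = 0.0;
};
```

With a phasor rate of 0 the delay stays fixed at the minimum, which makes the impulse response easy to check: an impulse reappears after minDelaySamples, then again scaled by the feedback.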

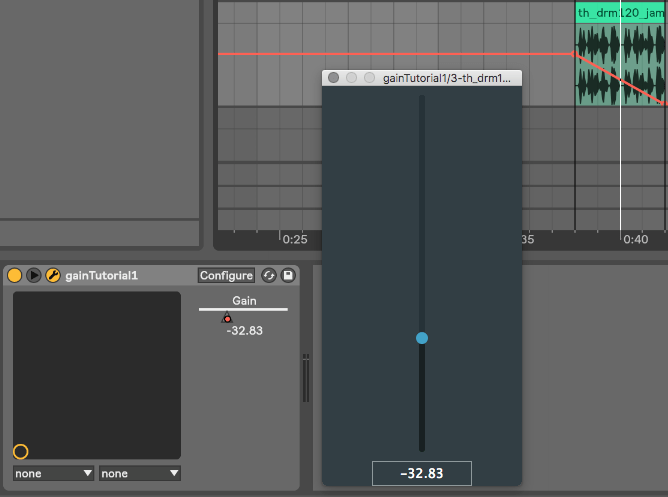

The sample playback speed and delay feedback can be controlled using the GUI.

Each drum break in the data folder has its own number of divisions, which is set when the sample is selected. This number depends on how many beats long the sample is, and on what I thought sounded good. I attempted to let the number of divisions be changed from the GUI while the program was running, but was unable to implement this smoothly.

Note: parts of this code were developed collaboratively with Callum Magill (cmagi001@gold.ac.uk), namely the algorithms that chop up the sample and play it back at the correct speeds.

Project 3: Karplus-Strong Study

A simple interactive implementation of the Karplus-Strong algorithm. The model is excited by clicking the mouse; the delay time decreases as the mouse moves from left to right, raising the perceived pitch of the sound.
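The core of Karplus-Strong is small enough to sketch directly: fill a delay line with a noise burst, then repeatedly feed back the average of two adjacent samples, scaled by a decay factor. The sketch below is an assumption-laden illustration, not the project’s code; delayFromMouse(), the KarplusStrong class and the decay value are hypothetical. The pitch is roughly sampleRate / delaySamples, so a shorter delay sounds higher.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Maps mouse x in [0, width] to a delay length that shrinks from maxDelay (left edge)
// to minDelay (right edge), so the pitch rises as the mouse moves right.
std::size_t delayFromMouse(double x, double width, std::size_t minDelay, std::size_t maxDelay) {
    assert(width > 0.0 && minDelay >= 2 && maxDelay >= minDelay);
    double t = x / width;
    if (t < 0.0) t = 0.0;
    if (t > 1.0) t = 1.0;
    const double d = static_cast<double>(maxDelay) - t * static_cast<double>(maxDelay - minDelay);
    return static_cast<std::size_t>(d + 0.5);  // round to the nearest whole sample
}

// Basic plucked string: noise burst in a delay line, two-point average in the feedback path.
class KarplusStrong {
public:
    explicit KarplusStrong(double decay = 0.996) : decay_(decay) {}

    void pluck(std::size_t delaySamples, unsigned seed = 1) {
        assert(delaySamples >= 2);
        std::mt19937 rng(seed);
        std::uniform_real_distribution<double> noise(-1.0, 1.0);
        buf_.assign(delaySamples, 0.0);
        for (auto& s : buf_) s = noise(rng);  // the initial excitation
        pos_ = 0;
    }

    double process() {
        if (buf_.empty()) return 0.0;
        const std::size_t next = (pos_ + 1) % buf_.size();
        const double out = buf_[pos_];
        buf_[pos_] = decay_ * 0.5 * (buf_[pos_] + buf_[next]);  // averaging acts as a gentle low-pass
        pos_ = next;
        return out;
    }

private:
    double decay_;
    std::vector<double> buf_;
    std::size_t pos_ = 0;
};
```

On a mouse click, a handler would call pluck(delayFromMouse(x, width, minDelay, maxDelay)), and the audio callback would call process() once per output sample.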

A filter removes some of the high end from the initial noise burst. The filter’s cutoff frequency is mapped through a curve, giving more resolution in the low end.
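One common way to get more low-end resolution is an exponential mapping from a normalised control to frequency, shown below with a simple one-pole low-pass. The exact curve and filter type used in the project are not documented, so both cutoffFromControl() and onePoleLowPass() are hypothetical stand-ins.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Maps a normalised control t in [0, 1] exponentially onto [minHz, maxHz], so equal
// steps in t correspond to equal frequency ratios rather than equal numbers of Hz.
double cutoffFromControl(double t, double minHz, double maxHz) {
    assert(minHz > 0.0 && maxHz > minHz);
    if (t < 0.0) t = 0.0;
    if (t > 1.0) t = 1.0;
    return minHz * std::pow(maxHz / minHz, t);
}

// One-pole low-pass applied in place, e.g. to the noise burst before it enters the delay line.
void onePoleLowPass(std::vector<double>& x, double cutoffHz, double sampleRate) {
    assert(cutoffHz > 0.0 && sampleRate > 0.0);
    const double pi = std::acos(-1.0);
    const double a = std::exp(-2.0 * pi * cutoffHz / sampleRate);  // pole position
    double y = 0.0;
    for (double& s : x) {
        y = (1.0 - a) * s + a * y;
        s = y;
    }
}
```

With a 20 Hz to 20 kHz range, the midpoint of the control lands near 632 Hz instead of about 10 kHz, which is where the extra low-end resolution comes from.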

The OSC code in this project can be ignored. It was written for communication with a Max patch, whose GUI keyboard provided a handy way to experiment, but the Max patch is not needed for this iteration.

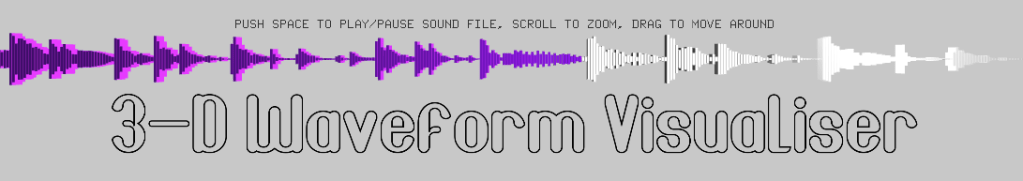

Project 4: 3-D Waveform Visualiser

This project is a prototype of a 3-D waveform visualiser, made in openFrameworks.

It takes the data from a given audio file and pushes it into a 2-D vector in segments sized to match the audio buffer: the outer vector holds the segments, and each inner vector contains that segment’s amplitude values.
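The segmenting step might look like this for a mono buffer. It is a sketch under the assumption that each segment holds exactly one buffer’s worth of samples; the segment() function is hypothetical and not taken from the project.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Splits a mono sample buffer into consecutive segments of bufferSize samples.
// The outer vector holds the segments; each inner vector holds amplitude values.
// The last segment is shorter if the length is not a multiple of bufferSize.
std::vector<std::vector<float>> segment(const std::vector<float>& samples, std::size_t bufferSize) {
    assert(bufferSize > 0);
    std::vector<std::vector<float>> segments;
    for (std::size_t start = 0; start < samples.size(); start += bufferSize) {
        const std::size_t end = std::min(start + bufferSize, samples.size());
        segments.emplace_back(samples.begin() + static_cast<std::ptrdiff_t>(start),
                              samples.begin() + static_cast<std::ptrdiff_t>(end));
    }
    return segments;
}
```

With 10 samples and a buffer size of 4, this yields three segments of sizes 4, 4 and 2.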

The RMS of each segment is calculated manually and used to determine the height of the 3-D blocks that make up the visual waveform. The static waveform of the whole audio file is drawn on setup, using a vector of pointers to a Block class.
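The RMS calculation is the square root of the mean of the squared samples. The sketch below pairs it with a minimal stand-in for the Block class, held through std::unique_ptr. The Block fields, buildBlocks() and its scaling parameters are hypothetical; the real class presumably also handles 3-D drawing through openFrameworks.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Root-mean-square of one segment.
double rms(const std::vector<float>& segment) {
    if (segment.empty()) return 0.0;
    double sumSq = 0.0;
    for (float s : segment) sumSq += static_cast<double>(s) * s;
    return std::sqrt(sumSq / static_cast<double>(segment.size()));
}

// Hypothetical stand-in for the project's Block class, with only the fields used here.
struct Block {
    float x = 0.0f;       // horizontal position along the waveform
    float height = 0.0f;  // visual height derived from the segment's RMS
};

// Builds one block per segment, laid out left to right.
std::vector<std::unique_ptr<Block>> buildBlocks(const std::vector<std::vector<float>>& segments,
                                                float blockWidth, float maxHeight) {
    assert(blockWidth > 0.0f && maxHeight > 0.0f);
    std::vector<std::unique_ptr<Block>> blocks;
    blocks.reserve(segments.size());
    for (std::size_t i = 0; i < segments.size(); ++i) {
        auto b = std::make_unique<Block>();
        b->x = static_cast<float>(i) * blockWidth;
        b->height = static_cast<float>(rms(segments[i])) * maxHeight;
        blocks.push_back(std::move(b));
    }
    return blocks;
}
```

Holding the blocks through std::unique_ptr keeps the “vector of pointers” layout while releasing each Block automatically when the vector is destroyed.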

Playing the audio file colours the waveform so that the current play point is tracked. This was achieved by incrementing a counter on each cycle of the audio loop and using that number to decide which blocks are coloured.
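Because each block corresponds to one audio buffer, a per-buffer counter doubles as the index of the block currently playing. The hypothetical PlayheadTracker below sketches that idea. It uses std::atomic because the audio callback and the drawing code usually run on different threads; that thread split is an assumption about the setup, not something stated above.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>

// Counts audio buffers so the draw code knows which blocks to colour.
class PlayheadTracker {
public:
    explicit PlayheadTracker(std::size_t numBlocks) : numBlocks_(numBlocks) {
        assert(numBlocks > 0);
    }

    // Called once per audio buffer from the audio callback; stops at the last block.
    void onAudioBuffer() {
        const std::size_t c = counter_.load(std::memory_order_relaxed);
        if (c < numBlocks_) counter_.store(c + 1, std::memory_order_relaxed);
    }

    // Called from the draw code: true if block i has been reached and should be coloured.
    bool isPlayed(std::size_t i) const {
        return i < counter_.load(std::memory_order_relaxed);
    }

private:
    std::size_t numBlocks_;
    std::atomic<std::size_t> counter_{0};
};
```

Only the audio thread writes the counter, so relaxed loads and stores are enough for a purely visual indicator.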

This is a work in progress: currently the program must be restarted for the visualiser to run again. There is also a playback issue: the audio file alternates between normal speed and half speed each time it loops. I couldn’t work out exactly why, though it seems to be related to the program calling “playOnce()” outside the audio loop in order to retrieve the amplitude values, which somehow causes irregular playback of the file in the audio loop.

Ideally, this program will eventually have scrubbing functionality, where the playhead can be moved by dragging the waveform with the mouse.